Valerie Reyna, the Lois and Melvin Tukman Professor of Human Development, is leading Cornell’s contribution to a multi-institutional effort supported by the National Science Foundation (NSF) that will develop new artificial intelligence technologies designed to promote trust and mitigate risks, while simultaneously empowering and educating the public.

Funded by a $20 million award from the NSF and led by the University of Maryland (UMD), the Institute for Trustworthy AI in Law and Society (TRAILS) unites specialists in AI and machine learning with social scientists, legal scholars, educators and public policy experts.

Reyna, as the principal investigator of the Cornell component, will use her expertise in human judgment and cognition to advance efforts focused on how people interpret their use of AI. She will conduct research at Cornell that builds on her innovative theory of human psychology and decision-making and her prior NSF-funded research on how misinformation on social media shaped decision-making around COVID-19.

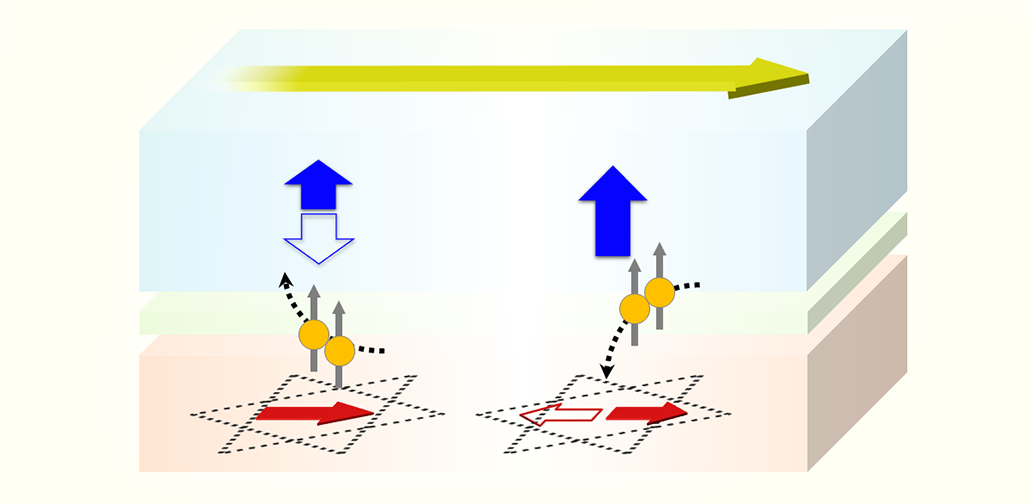

One of the pillars of the proposed research of the institute is Reyna’s “fuzzy-trace” theory, which is built on the concept of gist – representations that capture the essence of information, as opposed to details such as exact words or numbers. Her findings demonstrate that science communicators and other representatives need to connect their messages to social values and emotion to change behavior so that they can rely on meaningful interpretations of statistics and factual information. Contrary to other approaches, fuzzy-trace theory rejects the idea that communicators need to choose between conveying emotions or facts.

“The distinction between mental representations of the rote facts of a message – its verbatim representation – and its gist explains several paradoxes, including the disconnect between knowing facts and yet making decisions that seem contrary to those facts,” Reyna said.

In addition to UMD and Cornell, TRAILS will include faculty members from George Washington University and Morgan State University, with additional support from the National Institute of Standards and Technology and private sector organizations including the Dataedx Group, Arthur AI, Checkstep, FinRegLab and Techstars.

The multidisciplinary team will work with impacted communities, private industry and the federal government to determine what trust in AI looks like, how to develop technical solutions for AI that people can trust, and which policy models best create and sustain trust.

The catalyst for establishing the new institute is the consensus that AI is currently at a crossroads. AI-infused systems have great potential to enhance human capacity, increase productivity, spur innovation and mitigate complex problems, but today’s systems are developed and deployed in a process that is opaque and insular to the public, and therefore, often untrustworthy to those affected by the technology. To develop trustworthy AI that meets society’s needs, understanding the psychology of the human user is essential.

Inappropriate trust in AI can result in many negative outcomes, said Hal Daumé III, a UMD professor of computer science who is lead principal investigator of the NSF award and will serve as the director of TRAILS. People often “overtrust” AI systems to do things they fundamentally cannot. This can lead to people or organizations giving up their own power to systems that are not acting in their best interest. At the same time, people can also “undertrust” AI systems, leading them to avoid using systems that could ultimately help them.

Given these conditions – and the fact that AI is increasingly being deployed to mediate society’s online communications, determine health care options and offer guidelines in the criminal justice system – it has become urgent to ensure that trust in AI systems matches their levels of trustworthiness.

“My research with TRAILS will combine cognitive, social and information science approaches to ensure that diverse human voices and values shape the future of AI modeling,” Reyna said. “The fuzzy-trace theory predicts fundamental mismatches between human and artificial intelligence that must be bridged to achieve trustworthy AI systems.”