Over several months, the Australian, state and territory governments have worked to align how we approach assurance of AI in government. Learn about what was involved and what happens now.

Today, the Data and Digital Ministers Meeting (DDMM) has agreed to, and released, the National framework for the assurance of artificial intelligence in government, continuing governments’ commitment to putting the rights, wellbeing and interests of people first.

While AI (artificial intelligence) technologies have been used for decades, general-use capabilities – such as generative AI – have made their way into everyday tools within the space of a couple of years.

Governments recognise that, to embrace the opportunities of AI, they must gain the public’s confidence and trust regardless of state and territory borders or levels of government.

The national framework is the product of nearly a year’s collaboration between Australia’s governments. This is how it came about and what happens from here.

Bringing together a consistent national approach

In June 2023, Data and Digital Ministers agreed to work towards a nationally consistent approach to the safe and ethical use of artificial intelligence by governments.

A cross-jurisdiction working group, chaired by the Commonwealth and NSW Governments, began work to align each jurisdiction’s approach while accounting for its unique circumstances, responsibilities and structures of government. The NSW Government’s AI assurance framework (Digital NSW, 2022), one of the first in the world, was used as the baseline for developing the national framework.

‘This initiative is a great demonstration of collaboration between the Australian, state and territory governments,’ said Lucy Poole, co-chair of the working group and general manager of the Digital Transformation Agency’s Strategy, Planning and Performance division.

‘We all look forward to continuing this collaboration and sharing our learnings as we apply and improve our AI assurance processes.’

‘No matter where you live or do business in Australia, your governments are able to apply this vital assurance from an even and consistent baseline.’

Looking inside the framework

The national framework recommends practices and cornerstones of AI assurance, an essential part of broader AI governance in the public sector.

Practices

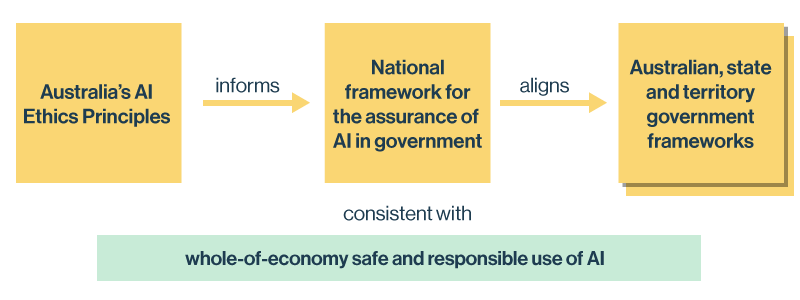

The practices are the crux of the framework, demonstrating how governments can practically apply Australia’s AI Ethics Principles (DISR 2022). From ‘maintain reliable data and information assets’ to ‘comply with anti-discrimination obligations’, each practice is aligned to one of these principles.

Cornerstones

The practices are complemented by 5 assurance cornerstones: governance, data governance, standards, procurement, and a risk-based approach.

These mechanisms often already exist within governments and have been identified as valuable enablers for putting AI assurance into practice.

Case studies and resources

Several case studies share different governments’ lessons from previous experience, such as where AI and recordkeeping intersect with transparency and explainability. The many resources that informed the framework are also listed and linked for everyone’s benefit.

You can explore the national framework or download a PDF copy on the Department of Finance website.

Putting the framework into practice

As noted above, each government holds different responsibilities and organises how it delivers services in its own way. Differences in population, geography and economy can also create distinct priorities.

To account for this, the Australian, state and territory governments will each develop assurance processes that suit their needs while aligning with the national framework. This gives all people and businesses measurable confidence that any government’s adoption of AI upholds Australia’s AI Ethics Principles.

For its part, the Australian Government will have its own AI assurance framework developed and piloted by the Digital Transformation Agency (DTA).

The national framework is one of many initiatives through which Australia’s governments aim to be exemplars of the safe and responsible use of AI.