A group of researchers has successfully demonstrated automatic charge state recognition in quantum dot devices using machine learning techniques, representing a significant step towards automating the preparation and tuning of quantum bits (qubits) for quantum information processing.

Semiconductor qubits are quantum bits built from semiconductor materials. Because these materials are common in traditional electronics, the qubits can be integrated with conventional semiconductor technology. This compatibility is why scientists consider them strong candidates for the qubits of future quantum computers.

In semiconductor spin qubits, the spin state of an electron confined in a quantum dot serves as the fundamental unit of data, or the qubit. Forming these qubit states requires tuning numerous parameters, such as gate voltages, a task typically performed by human experts.

However, as the number of qubits grows, the number of parameters grows with it and tuning becomes increasingly complex. This is an obstacle to realizing large-scale quantum computers.

“To overcome this, we developed a means of automating the estimation of charge states in double quantum dots, crucial for creating spin qubits where each quantum dot houses one electron,” points out Tomohiro Otsuka, an associate professor at Tohoku University’s Advanced Institute for Materials Research (WPI-AIMR).

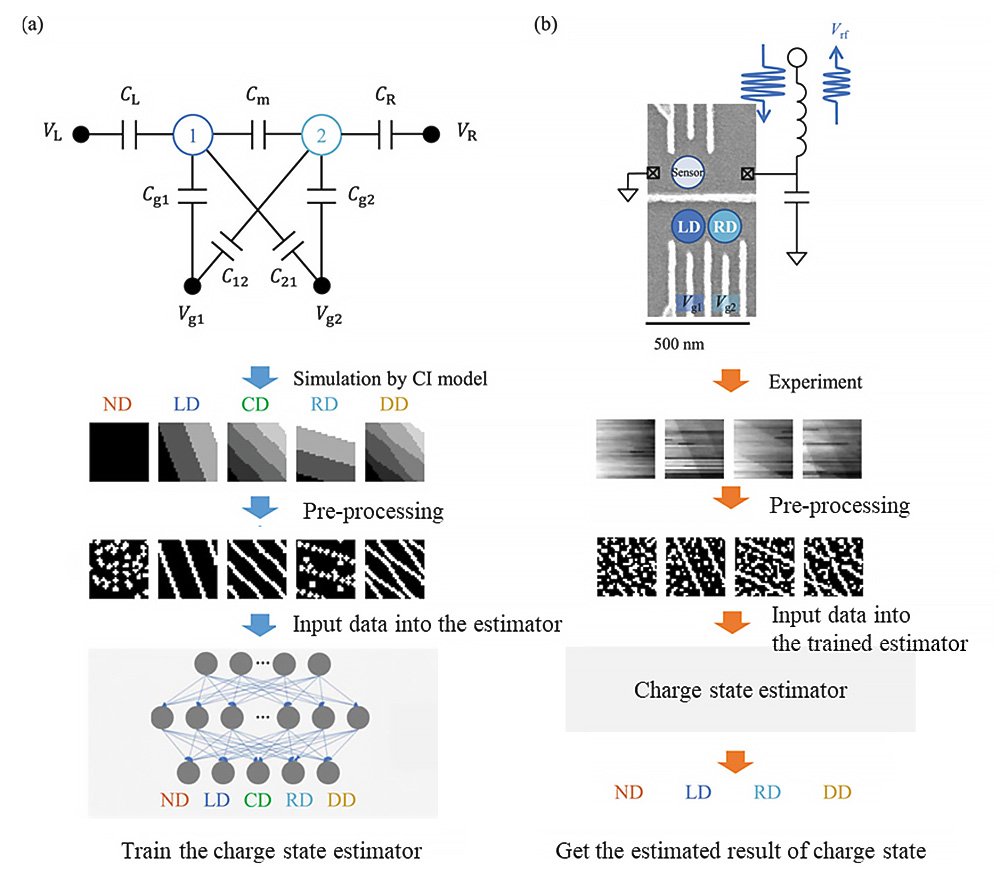

Using a charge sensor, Otsuka and his team obtained charge stability diagrams to identify optimal gate voltage combinations ensuring the presence of precisely one electron per dot. Automating this tuning process required developing an estimator capable of classifying charge states based on variations in charge transition lines within the stability diagram.

To construct this estimator, the researchers employed a convolutional neural network (CNN) trained on data prepared using a lightweight simulation model: the Constant Interaction model (CI model). Pre-processing simplified the data and made it more robust to noise, improving the CNN’s ability to classify charge states accurately.
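To make the idea of simulated training data concrete, the following is a minimal sketch of how a constant-interaction-style model can produce a labeled charge stability diagram for a double quantum dot. It is not the researchers' simulation code. The energy expression is a common simplified textbook form, and the function names, the charging energies `ec1`, `ec2`, `ecm`, and the lever arms `alpha1`, `alpha2` are all illustrative assumptions in arbitrary units.

```python
import numpy as np

def charge_state_map(vg1, vg2, ec1=1.0, ec2=1.0, ecm=0.2,
                     alpha1=1.0, alpha2=1.0, n_max=3):
    """Ground-state electron numbers (n1, n2) on a grid of two gate voltages.

    Assumed energy (arbitrary units), a simplified constant-interaction form:
        E = 0.5*ec1*d1**2 + 0.5*ec2*d2**2 + ecm*d1*d2,
    where d_i = n_i - alpha_i * vg_i is the charge offset on dot i.
    """
    vg1 = np.asarray(vg1, dtype=float)
    vg2 = np.asarray(vg2, dtype=float)
    # Gate-induced charge on each dot (hypothetical lever arms alpha1, alpha2).
    ng1 = alpha1 * vg1[:, None]              # shape (V1, 1)
    ng2 = alpha2 * vg2[None, :]              # shape (1, V2)
    ns = np.arange(n_max + 1)                # candidate occupations 0..n_max
    d1 = ns[:, None, None, None] - ng1       # shape (N, 1, V1, 1)
    d2 = ns[None, :, None, None] - ng2       # shape (1, N, 1, V2)
    energy = 0.5 * ec1 * d1**2 + 0.5 * ec2 * d2**2 + ecm * d1 * d2
    # Pick the lowest-energy (n1, n2) pair at every gate-voltage point.
    flat = energy.reshape((n_max + 1) ** 2, vg1.size, vg2.size)
    idx = flat.argmin(axis=0)
    n1_map, n2_map = np.divmod(idx, n_max + 1)
    return n1_map, n2_map

def transition_lines(n1_map, n2_map):
    """Boolean image marking pixels where the charge state changes."""
    lines = np.zeros(n1_map.shape, dtype=bool)
    lines[1:, :] |= (n1_map[1:] != n1_map[:-1]) | (n2_map[1:] != n2_map[:-1])
    lines[:, 1:] |= (n1_map[:, 1:] != n1_map[:, :-1]) | (n2_map[:, 1:] != n2_map[:, :-1])
    return lines

# Example: a 41 x 41 diagram; the (n1, n2) maps act as labels for the line image.
vg = np.linspace(0.0, 2.0, 41)
n1_map, n2_map = charge_state_map(vg, vg)
diagram = transition_lines(n1_map, n2_map)
```

In a sketch like this, the line image plays the role of the stability diagram and the occupation maps supply the charge-state labels, which is why a lightweight model is enough to generate large amounts of training data cheaply.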

When tested on experimental data, the estimator initially classified most charge states effectively, though some states exhibited higher error rates. To address this, the researchers used Grad-CAM visualization to uncover decision-making patterns within the estimator. They found that errors were often caused by coincidentally connected noise being misinterpreted as charge transition lines. By adjusting the training data and refining the estimator’s structure, the researchers significantly improved accuracy for the previously error-prone charge states while maintaining high performance for the others.
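For readers unfamiliar with Grad-CAM, the sketch below shows its core computation in NumPy. It assumes the feature maps of a convolutional layer and the gradients of the chosen class score with respect to those maps have already been extracted from a trained network; the function name `grad_cam` and the toy arrays are illustrative and are not taken from the researchers' code.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Grad-CAM heat map from one convolutional layer.

    activations: array (K, H, W), feature maps of the layer.
    gradients:   array (K, H, W), d(class score)/d(activations).
    Returns an (H, W) map scaled to [0, 1] (all zeros if nothing is positive).
    """
    # Channel weights: gradients averaged over the spatial dimensions.
    weights = gradients.mean(axis=(1, 2))                  # shape (K,)
    # Weighted sum of the feature maps over channels.
    cam = np.tensordot(weights, activations, axes=1)       # shape (H, W)
    # ReLU keeps only regions that support the chosen class.
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Toy example: channel 0 supports the class, channel 1 argues against it.
acts = np.zeros((2, 2, 2))
acts[0, 0, 0] = 1.0
acts[1, 1, 1] = 1.0
grads = np.stack([np.ones((2, 2)), -np.ones((2, 2))])
heat = grad_cam(acts, grads)
```

Overlaying such a heat map on a stability diagram shows which pixels drove a classification, which is how stray noise mistaken for a transition line can be spotted.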

“Utilizing this estimator means that parameters for semiconductor spin qubits can be automatically tuned, something necessary if we are to scale up quantum computers,” adds Otsuka. “Additionally, by visualizing the previously black-boxed decision basis, we have demonstrated that it can serve as a guideline for improving the estimator’s performance.”

Details of the research were published in the journal APL Machine Learning on April 15, 2024.

- Publication Details:

Title: Visual explanations of machine learning model estimating charge states in quantum dots

Authors: Yui Muto, Takumi Nakaso, Motoya Shinozaki, Takumi Aizawa, Takahito Kitada, Takashi Nakajima, Matthieu R. Delbecq, Jun Yoneda, Kenta Takeda, Akito Noiri, Arne Ludwig, Andreas D. Wieck, Seigo Tarucha, Atsunori Kanemura, Motoki Shiga, and Tomohiro Otsuka

Journal: APL Machine Learning

DOI: